Contact centers rarely struggle because agents have “strong accents.” They struggle because listeners expend more cognitive effort than they should just to understand speech—especially at scale, under time pressure, and across global operations.

Most solutions labeled AI-powered accent neutralization promise clarity but quietly introduce new risks: robotic tone, delayed responses, agent fatigue, and customer distrust. The result is a familiar pattern—strong demos, weak pilots, and silent rollbacks.

This guide is written to correct that pattern.

Why “Accent” Is the Wrong Starting Point

Accent is not a defect. It is a linguistic variation. What degrades customer experience is listener effort: the mental load required to decode unfamiliar phonetics under imperfect conditions.

In live contact center environments, listener effort spikes for a variety of reasons—background noise, call compression, script deviation, stress, and the cumulative fatigue of hundreds of calls per day. Teams that frame the problem as “accent removal” consistently end up optimizing for the wrong outcome: maximal transformation instead of minimal effort reduction.

Most competitors define the problem emotionally (“customers don’t like accents”). This framing is simplistic and increasingly risky as DEI considerations move to the center of workforce policy. The real issue is a signal-processing and CX problem, not a cultural one.

“Listener effort is the metric that actually predicts communication breakdown. Accent is a surface feature—what taxes the listener is the combination of acoustic novelty, background noise, and processing speed under time pressure. When we measure comprehension difficulty in lab settings, accent alone accounts for a fraction of the variance. The operational conditions around the call matter far more.”

Defining “AI-Powered Accent Neutralization” for Contact Centers

The market uses “accent neutralization” to describe at least three distinct approaches—and conflating them is one of the most common buying mistakes.

- Voice Replacement / Voice Conversion re-synthesizes speech into a different voice profile entirely. It offers high transformation but carries high risk. This approach is often unsuitable for regulated environments and raises the most serious questions about agent identity and trust.

- Accent Translation maps phonemes to a target accent profile. It’s less invasive than full voice replacement but can still distort prosody and emotional cues in ways that undermine the conversation.

- Accent Harmonization (Listener-Effort Reduction) is the most targeted approach. It micro-adjusts phonetic clarity while preserving the agent’s original voice identity. The focus is intelligibility, not transformation.

Many vendors market all three under the same banner of “accent neutralization,” creating false equivalence that makes evaluation nearly impossible. Before committing to a platform, ask vendors explicitly which layer they operate on and don’t accept a vague answer.

Glossary: Neutralization vs. Conversion vs. Harmonization

| Term | Description | Risk Level | Key Characteristics & Notes |
|---|---|---|---|
| Voice Conversion | Replaces the agent’s voice with a synthesized alternative voice profile. Maximum phonetic transformation. | High Risk | Raises legal and ethical concerns in regulated industries. Voice identity is not preserved. |
| Accent Translation | Maps individual phonemes from one accent profile to another. | Medium Risk | Agent voice partially preserved, but prosody (rhythm, stress, intonation) is frequently distorted as a side effect. |
| Accent Harmonization | Applies micro-adjustments to phonetic clarity without replacing the voice or altering prosodic structure. | Lower Risk | Preserves agent voice identity. Optimizes for listener intelligibility, not acoustic conformity. This is the approach most appropriate for live contact center use. |

Where Accent AI Breaks in Real Contact Centers

Demos rarely reveal operational failure. Here’s what actually goes wrong in production environments.

- Latency creep is among the most common issues. Delays above roughly 300–400 milliseconds disrupt natural turn-taking in conversation. Even modest latency introduced by AI processing can make calls feel stilted and unnatural.

- Prosody flattening is subtler but equally damaging. When AI smooths out acoustic variation to improve clarity, it often strips out the emotional cues—pace, pitch, rhythm—that agents rely on to convey empathy, urgency, and sincerity.

- Agent self-monitoring fatigue emerges over time. Agents become aware of the AI layer and begin unconsciously adjusting their speech to “help” the system—a cognitive overhead that compounds across a full shift.

- Repeat loops are the cruelest irony: misheard or distorted output causes customers to ask for clarification, which increases average handle time rather than reducing it.

Expert Perspective: “We thought the agents would adapt. What we didn’t anticipate was the feedback loop: agents trying to speak ‘for the AI,’ the AI struggling to process modified input, and customers hearing something that felt machine-like. By week three, fatigue scores were the highest we’d seen in the queue all year. The tool wasn’t the problem. Deploying it without behavioral guardrails was.”

When Should Accent Neutralization Not Be Used?

Accent AI is not always the right tool, and in many cases, it will make things worse.

Avoid deploying accent AI when agents already score well on intelligibility metrics—there’s no listener effort problem to solve and adding a transformation layer introduces risk without benefit. Calls requiring emotional nuance, such as escalations or complaints, are poor candidates because prosody carries meaning that AI processing can distort. Regulatory environments that prohibit voice alteration make the decision simple. And if latency budgets are already stretched, adding real-time voice processing will push them over the edge.

In several observed environments, removing a previously deployed accent AI tool improved agent confidence and call flow measurably. The instinct to add technology is not always right.

What Buyers Should Measure Instead of “Accent Reduction”

Most vendor evaluations rely on demo-environment metrics that don’t survive contact with production reality. Here’s what to track instead.

- Listener effort scores from post-call QA tagging give you a direct read on whether comprehension improved.

- Repeat rate per call tells you how often customers ask for clarification, a concrete proxy for clarity.

- Agent self-reported cognitive load catches the fatigue problem before it shows up in attrition data.

- MOS (Mean Opinion Score) deltas under load—not in isolation—reveal how voice quality holds up when infrastructure is stressed.

- Latency variance, not just average latency, captures the tail behavior that disrupts conversations.

Teams that tracked only CSAT missed early warning signs of agent strain. By the time satisfaction scores shifted, the damage was already embedded in operations.
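As a rough illustration of two of the metrics above, the sketch below summarizes latency tail behavior and repeat rate from per-call logs. The function names, the input shapes, and the 300 ms default budget (taken from the lower end of the range cited earlier) are assumptions made for this example, not part of any vendor's tooling.

```python
from statistics import mean, pstdev, quantiles


def latency_summary(latencies_ms):
    """Summarize observed latency samples (milliseconds).

    Needs at least two samples. Reports spread and tail percentiles,
    since the average alone hides the slow outliers.
    """
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "mean_ms": mean(latencies_ms),
        "stdev_ms": pstdev(latencies_ms),
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
    }


def share_over_budget(latencies_ms, budget_ms=300):
    """Fraction of samples that exceed the latency budget (assumed value)."""
    over = sum(1 for value in latencies_ms if value > budget_ms)
    return over / len(latencies_ms)


def repeat_rate(repeat_requests_per_call):
    """Average number of customer clarification requests per call."""
    return sum(repeat_requests_per_call) / len(repeat_requests_per_call)


if __name__ == "__main__":
    # Hypothetical samples: mostly fast, with one slow outlier.
    samples = [100] * 9 + [500]
    print(latency_summary(samples))
    print(share_over_budget(samples))
    print(repeat_rate([0, 1, 2, 1]))
```

In this made-up sample the mean looks comfortable while the tail does not, which is the reason to report percentiles and spread alongside the average.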

Governance, Bias, and Trust Implications

This is the topic most vendors prefer to avoid. That’s exactly why it deserves direct attention.

Accent modification can reinforce bias if mispositioned. When framed as correcting how agents sound rather than improving how listeners receive speech, it sends a message (to agents, to customers, and to regulators) that certain voices are less acceptable. Unconscious accent bias in hiring and performance evaluation also leads to a ‘glass ceiling’ for diverse talent, where linguistic profiling prematurely halts the career progression of highly skilled individuals.

Transparency with agents matters more than customer disclosure. Trust erosion occurs faster internally than externally. If agents feel monitored, altered, or misrepresented by the technology they’re required to use, you will see it in engagement, attrition, and performance before you see it anywhere else.

Governance frameworks should define not just whether to deploy accent AI, but when and where it is active—and who has visibility into that.

How to Pilot Accent AI Without Damaging CX or Trust

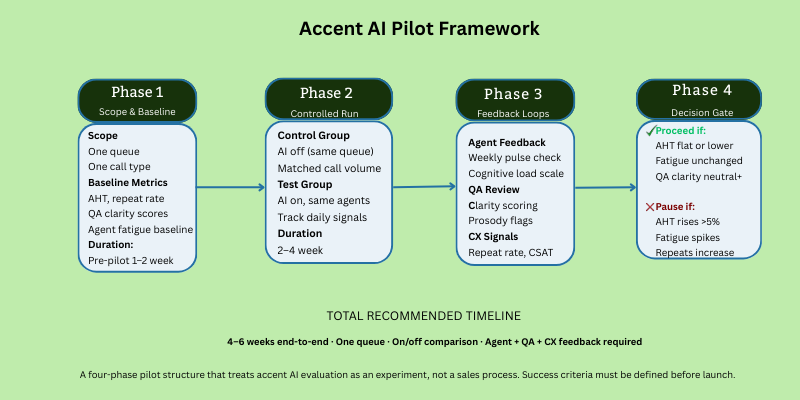

The best way to evaluate accent AI is to run a controlled pilot—not a showcase.

Structure the pilot with tight scope: one queue, one call type. Run it for two to four weeks. Use on/off comparison so you have a genuine control group. Build feedback loops that include agents, QA reviewers, and CX data, not just one of the three.

Define success criteria before you start. The minimum bar should be no increase in average handle time, no rise in agent fatigue indicators, and neutral or improved QA clarity scores. If a pilot can’t clear that bar, the tool isn’t ready for your environment—regardless of how it performed in the demo.
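One way to keep the success criteria honest is to write them down as an explicit check before the pilot starts. The sketch below compares an AI-off control arm with an AI-on treatment arm against the three minimum criteria above. The `ArmStats` fields, the score scales, and the `pilot_passes` name are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class ArmStats:
    """Aggregates for one pilot arm (AI off or AI on). Fields are illustrative."""
    avg_handle_time_s: float    # average handle time in seconds
    agent_fatigue_score: float  # assumed scale: higher means more fatigue
    qa_clarity_score: float     # assumed scale: higher means clearer


def pilot_passes(control: ArmStats, treatment: ArmStats) -> dict:
    """Check the treatment arm against the minimum bar, relative to control."""
    checks = {
        "handle_time_not_increased":
            treatment.avg_handle_time_s <= control.avg_handle_time_s,
        "fatigue_not_increased":
            treatment.agent_fatigue_score <= control.agent_fatigue_score,
        "clarity_neutral_or_better":
            treatment.qa_clarity_score >= control.qa_clarity_score,
    }
    # The overall verdict requires every individual criterion to hold.
    checks["pass"] = all(checks.values())
    return checks


if __name__ == "__main__":
    # Hypothetical numbers for a single queue and call type.
    ai_off = ArmStats(avg_handle_time_s=300, agent_fatigue_score=3.0, qa_clarity_score=4.0)
    ai_on = ArmStats(avg_handle_time_s=310, agent_fatigue_score=3.0, qa_clarity_score=4.2)
    print(pilot_passes(ai_off, ai_on))
```

This version uses strict comparisons on raw averages. A real pilot would likely also want a noise margin or a significance test before treating a small difference as a pass or a fail.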

Run pilots like experiments, not showcases.

A More Responsible Way to Think About Accent AI

Used well, accent AI for contact centers can reduce listener effort and improve operational consistency. Used indiscriminately, it can erode trust with customers and with the agents who power your operation.

The future of this category belongs to approaches that preserve voice identity, respect human variability, and optimize for effort reduction rather than sameness. Solutions built around harmonization rather than replacement align more closely with that philosophy—but outcomes still depend entirely on how responsibly teams deploy them.

If your strategy starts with “neutralizing accents,” you are already solving the wrong problem. Start instead with clarity, effort, and trust—and evaluate every tool through that lens.

Evaluate Accent AI Without Guesswork or Overprocessing

If you’re considering AI-powered accent neutralization to reduce listener effort without distorting agent voice, trust, or call flow, Accent Harmonizer by Omind is designed to support this kind of evaluation approach.